Why Choose AI Post-Editing?

Get significantly faster, more scalable, and more cost-effective multilingual content delivery, at levels not previously possible.

Organizations with ambitious growth goals face mounting pressure to produce multilingual content faster, at scale, and with greater cost efficiency. Traditional translation methods often create bottlenecks, making it hard to keep pace with global demand while maintaining quality across all languages and regions. For many, the result is a translation backlog that limits their ability to reach new audiences and adapt quickly to evolving market needs.

AI post-editing (also called automated post-editing) is transforming this landscape. We combine machine-generated translations, produced with Neural Machine Translation (NMT) or Retrieval-Augmented Generation (RAG), with agentic AI post-editing prompt chains and targeted human oversight. The result is dramatically faster content delivery at lower cost, with a level of speed and scalability that was previously unattainable.

Lionbridge’s AI post-editing solution meets a variety of content needs, with configurable workflows for different languages, industries, and quality expectations. This innovative approach helps companies:

- Overcome translation obstacles.

- Maintain expected quality.

- Expand their global reach.

How Lionbridge’s AI Post-Editing Solution Works

AI post-editing uses advanced Large Language Models (LLMs) and agentic prompt chains purpose-built to refine machine-generated translations. By automating much of the post-editing process, it dramatically reduces human effort, allowing experts to focus on the content that requires specialized review or validation.

Here’s an overview of Lionbridge’s solution, which incorporates AI post-editing.

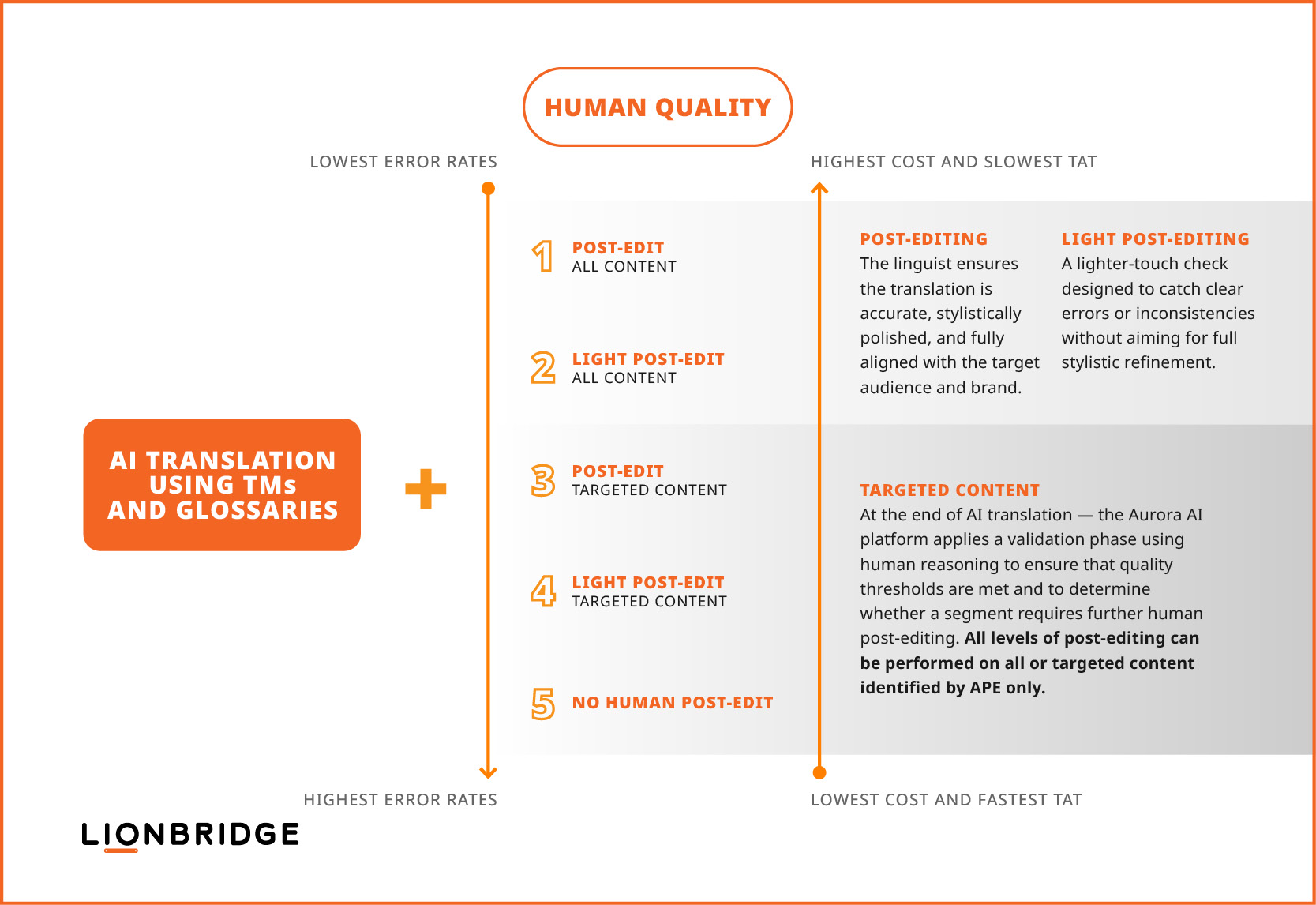

AI-Driven Service Levels: Varying Degrees of Human-in-the-Loop for Every Content Need

Let your content profile, goals, and budget guide the level of human intervention you choose for quality evaluation.

Why Choose Lionbridge’s AI-First Translation Solution?

Lionbridge’s advanced agentic localization method reduces human effort with a customizable translation process.

- The best MT solution for translation and superior frontier LLMs for AI decisioning and editing

- No lengthy or costly NMT or LLM training

- LLM decisioning based on the content

- Integration of language assets (TMs, glossaries, and style guides)

- Customization of prompts for your tone of voice and brand

- Fuzzy match LLM post-editing

- Advanced content validation based on human reasoning

“We offer an outcome-based approach, rather than a one-size-fits-all solution. With a sliding scale of human involvement, you can choose the right balance for your content needs — and measure quality with quantifiable defect rates. For many languages and content types, even higher error rates remain well within acceptable bounds.”

—Simone Lamont, Lionbridge VP of Global Solutions

The Benefits of Lionbridge’s Automated Post-Editing Solution

Translate more. Get it faster. Spend less — while maintaining quality.

Content Scalability

Translate more than you ever thought possible; expand your content reach across languages and markets with a solution designed for high-volume, ongoing localization.

Speed

Accelerate multilingual content delivery to unprecedented levels, dramatically reducing time-to-market for global launches and enabling simultaneous release of content in multiple languages.

Cost Efficiency

Experience lower translation costs than you ever have, with AI post-editing workflows delivering high-quality output at a fraction of traditional localization costs.

Quality

Achieve reliable, high-quality translations through advanced AI and varying levels of human validation that ensure accuracy, tone, and brand consistency. Human-in-the-loop involvement is essential for specialized content and low-resource languages, while many other projects require less review. Our TRUST framework promotes trust in AI, and our REACH framework keeps AI focused on measurable business outcomes.

Review Some of the Most Engaging Content

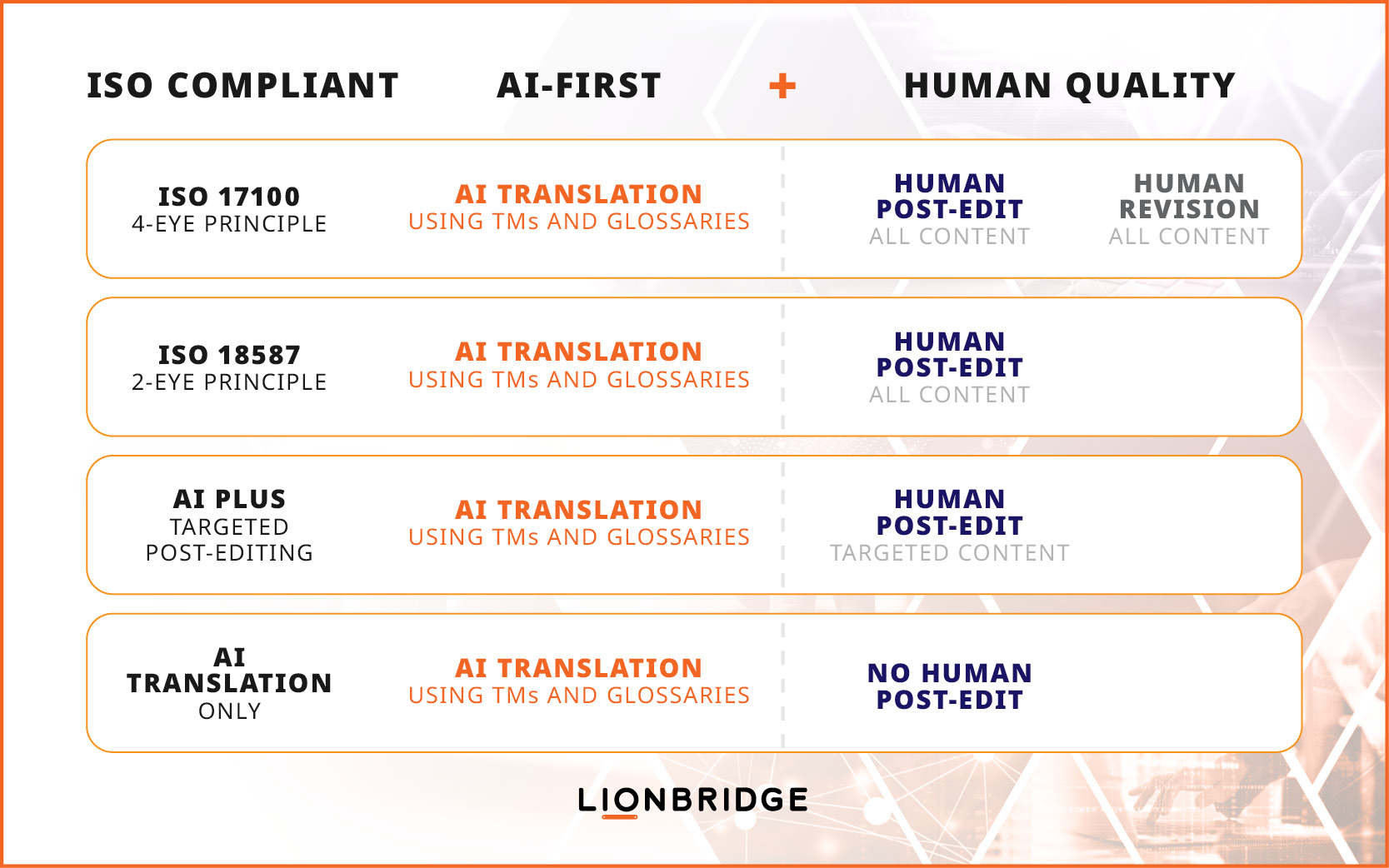

The Best of All Worlds: ISO Compliance, AI-First, Plus Human Quality

Lionbridge’s flexible workflows achieve industry-leading ISO compliance, when required, using advanced AI-powered translation and expert human quality assurance.

Understanding AI Post-Editing: Your Top Questions Answered

We recognize that accuracy has long been a challenge for MT solutions. Lionbridge pairs an AI-first approach, including automated quality measurement, with a human-in-the-loop approach, so we can keep a pulse on accuracy at all times.

We use AI to enhance the translation output of traditional tools, such as Translation Memories (TMs), Neural Machine Translation (NMT), and RAG.

Our experience with AI post-editing demonstrates that it can meet scalability needs and maintain quality. Still, human oversight is essential to monitor and adjust the tool for accuracy and specific content requirements.

Our AI post-editing solution starts with an initial assessment of the source content to understand its overall context. Editing and validation steps are performed with this context in mind, ensuring that the produced translations align with content goals and/or profiles.

We designed our AI post-editing solution to be configurable — linguistic prompts can be edited and updated based on automated monitoring and human feedback from linguists and customer Subject Matter Experts (SMEs) involved in the translation process.

Additionally, Lionbridge offers other AI solutions that enable us to perform source analysis and produce reports that capture recommended modifications to source content. We can pair these solutions with our AI post-editing solution for further optimization of the content strategy.

Our AI post-editing solution enables us to define and target where human linguistic involvement is most valuable.

Using our REACH framework, we work with our customers to assess content goals and configure the AI solution to optimize translation output. We can then define varying degrees of human involvement, ensuring the level of effort matches the content needs and profiles.
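To make "varying degrees of human involvement" concrete, here is a minimal Python sketch of segment routing. It assumes each AI-edited segment carries a quality-estimation score between 0.0 and 1.0. The tier names, the thresholds, and the rule that low-resource languages always receive full review are hypothetical illustrations, not Lionbridge's actual configuration.

```python
from enum import Enum


class ServiceLevel(Enum):
    # Hypothetical tiers for illustration; not Lionbridge's actual service names.
    AI_ONLY = "ai_only"
    TARGETED_REVIEW = "targeted_review"
    FULL_REVIEW = "full_review"


# Hypothetical thresholds on a 0.0-1.0 quality-estimation score.
# A segment scoring below its tier's threshold is routed to a human linguist.
REVIEW_THRESHOLDS = {
    ServiceLevel.AI_ONLY: 0.0,               # no score is below 0.0, so no human review
    ServiceLevel.TARGETED_REVIEW: 0.8,       # only lower-confidence segments are reviewed
    ServiceLevel.FULL_REVIEW: float("inf"),  # every segment is reviewed
}


def segments_for_human_review(scores: list[float], level: ServiceLevel,
                              low_resource: bool = False) -> list[int]:
    """Return the indices of segments a linguist should review at this service level."""
    # Assumed policy: low-resource languages always escalate to full human review.
    if low_resource:
        level = ServiceLevel.FULL_REVIEW
    threshold = REVIEW_THRESHOLDS[level]
    return [i for i, score in enumerate(scores) if score < threshold]
```

Moving a tier's threshold up or down is one simple way to trade review effort against risk; in practice, thresholds would presumably be calibrated against measured defect rates for each language and content type.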

Handling industry-specific terminology that varies by language and region is challenging, but it can be addressed in several ways.

By leveraging metadata, we can provide a broader context for the AI tools and instruct the LLM to address additional requirements, such as regional nuances — assuming the content is properly labeled and tagged. Lionbridge can support companies with data services to meet this prerequisite.

For terminology that must be adapted by municipality or region, we use a RAG framework. As part of AI post-editing, we also establish guidelines that direct the LLM to perform specific actions based on defined linguistic rules.
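As an illustration only, the retrieval-augmented approach to regional terminology can be sketched in Python under a few stated assumptions: glossary entries are tagged with a hypothetical region code, relevance is approximated with bag-of-words cosine similarity (a stand-in for the embedding search a production RAG system would more likely use), and the retrieved terms are inserted directly into the post-editing prompt. `TermEntry`, `retrieve_terms`, and `build_post_edit_prompt` are illustrative names, not part of any Lionbridge API.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class TermEntry:
    region: str       # hypothetical region tag, e.g. "es-MX"
    source_term: str
    target_term: str
    note: str = ""


def _vector(text: str) -> Counter:
    # Bag-of-words token counts; a production system would more likely use embeddings.
    return Counter(re.findall(r"\w+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve_terms(segment: str, region: str, store: list[TermEntry],
                   k: int = 3) -> list[TermEntry]:
    """Return up to k entries for `region`, ranked by similarity to the segment."""
    query = _vector(segment)
    scored = [(_cosine(query, _vector(e.source_term)), e) for e in store if e.region == region]
    relevant = [(score, e) for score, e in scored if score > 0]
    relevant.sort(key=lambda pair: pair[0], reverse=True)
    return [e for _, e in relevant[:k]]


def build_post_edit_prompt(segment: str, draft: str, region: str,
                           store: list[TermEntry]) -> str:
    """Augment the post-editing prompt with retrieved, region-specific terminology."""
    entries = retrieve_terms(segment, region, store)
    term_lines = [
        f'- "{e.source_term}" -> "{e.target_term}"' + (f" ({e.note})" if e.note else "")
        for e in entries
    ] or ["- (no region-specific terms retrieved)"]
    return (
        f"Post-edit the draft translation for the {region} market.\n"
        "Use the following approved terminology where it applies:\n"
        + "\n".join(term_lines)
        + f"\n\nSource: {segment}\nDraft: {draft}"
    )
```

For example, with "checking account" stored as "cuenta de cheques" for `es-MX` and "cuenta corriente" for `es-ES`, a Mexican Spanish segment retrieves only the `es-MX` entry, so the prompt steers the LLM toward the regional variant.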

Our solution can also be configured to reference external materials as supplemental examples, helping the LLM generate content that is better tailored to specific contexts.

Since this type of content tends to evolve over time, it is critical to maintain and update linguistic prompts. That’s why our AI solutions are built to be controlled and curated with human oversight.

Our AI-first platform is LLM-agnostic and not tied to any specific model. While it has been calibrated with OpenAI GPT models, we can work with customers to use their own LLM engines instead.

Using a customer’s own LLM falls under a custom configuration and may require additional evaluation and configuration to ensure the model meets quality standards.

For these solutions, we recommend collaborating with our Solutions and Language Technology teams to understand needs, goals, and requirements.

Our AI post-editing solution features configurable linguistic prompts that can be adapted to meet changing content needs and regulatory requirements. While we propose an AI-first process, the control and curation of linguistic prompts remain with humans — computational linguists, Subject Matter Experts (SMEs), and language experts — for optimization and calibration.

Generic, chatbot-based LLMs that have not been configured for a specific task struggle to address tone, content, and cultural nuance. Our solution addresses this challenge with a configurable prompt chain that gives the LLM specific instructions on style, tone, and terminology, along with linguistic guidelines tied to the context of the original content. Through controlled configuration of linguistic parameters and prompts, we can use AI post-editing to enhance translation processes.

Our solution begins by prompting the LLM to understand the context of the source content. We use that context to guide the LLM’s decision-making and editing, along with instructions for evaluating terminology, editing fuzzy matches, and validating translations at the segment level — ensuring that the AI operates within defined parameters. Our approach also allows for ongoing updates to linguistic prompts, so if hallucinations do occur, we can adjust and correct the issue as needed.
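The sequence described here (establish context first, then edit a fuzzy match or the MT draft, evaluate terminology, and validate each segment) can be sketched as a simple Python prompt chain. This is an illustration under assumptions, not Lionbridge's implementation: `llm` stands for any hypothetical function that takes a prompt string and returns the model's reply, the prompt wording is invented, and the 75-point fuzzy-match threshold is an arbitrary placeholder.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical interface: any function that sends a prompt to an LLM and returns its reply.
LLM = Callable[[str], str]


@dataclass
class Segment:
    source: str
    mt_draft: str
    fuzzy_match: Optional[str] = None  # target text of a TM fuzzy match, if one exists
    fuzzy_score: float = 0.0           # TM match score, 0-100
    final: str = ""
    needs_review: bool = False


def run_prompt_chain(segments: list[Segment], document_text: str,
                     glossary: dict[str, str], llm: LLM,
                     fuzzy_threshold: float = 75.0) -> list[Segment]:
    # Step 1: assess the source once to capture purpose, audience, and tone.
    context = llm(f"Summarize the purpose, audience, and tone of this content:\n{document_text}")

    for seg in segments:
        # Step 2: edit a sufficiently close fuzzy match; otherwise post-edit the MT draft.
        if seg.fuzzy_match and seg.fuzzy_score >= fuzzy_threshold:
            draft = llm(f"Context: {context}\nAdapt this translation memory fuzzy match "
                        f"to the new source.\nSource: {seg.source}\nFuzzy match: {seg.fuzzy_match}")
        else:
            draft = llm(f"Context: {context}\nPost-edit this machine translation.\n"
                        f"Source: {seg.source}\nDraft: {seg.mt_draft}")

        # Step 3: terminology evaluation, limited to glossary terms found in the source.
        hits = [f"{src} -> {tgt}" for src, tgt in glossary.items()
                if src.lower() in seg.source.lower()]
        if hits:
            draft = llm("Apply these required terms and change nothing else: "
                        + "; ".join(hits) + f"\nTranslation: {draft}")
        seg.final = draft

        # Step 4: segment-level validation; anything not explicitly passed goes to a human.
        verdict = llm(f"Context: {context}\nDoes the translation render the source accurately? "
                      f"Answer PASS or FAIL.\nSource: {seg.source}\nTranslation: {seg.final}")
        seg.needs_review = not verdict.strip().upper().startswith("PASS")
    return segments
```

Because each step is a separate plain-text prompt, a linguist could revise one instruction (for example, the terminology step) without touching the others, which is in the spirit of the ongoing prompt updates this answer describes.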

The human testers are typically computational linguists or linguists with experience in prompt engineering. They are responsible for designing, testing, and updating the parameters of our AI post-editing solution. These linguists validate the output and provide feedback, which is then used to adjust and refine linguistic prompts and parameters.