What is the future of Machine Translation (MT)? It’s a question we ponder day in and day out at Lionbridge.

The major Machine Translation engines — Google NMT, Bing NMT, Amazon, DeepL, and Yandex — made little-to-no quality advancements during 2022. See for yourself by checking our Machine Translation Tracker, the longest-standing MT tracker in the industry. These lackluster results have led us to ask some questions about the current Neural Machine Translation (NMT) paradigm.

- Is the NMT paradigm reaching a plateau?

- Is a new paradigm shift needed, given the engines’ inability to make significant strides?

- What could be next?

We’re betting that Large Language Models (LLMs) — with their massive amounts of content, including multimodality and multilingualism — will have something to do with a future paradigm. Why do we think this? Because of the results of our ground-breaking analysis that compared ChatGPT's translation performance with the performance of MT engines.

ChatGPT, OpenAI’s latest version of its GPT-3 family of LLMs, produced results inferior to those of dedicated MT engines, but not by much. Its performance was nothing short of remarkable and undoubtedly has implications for the future of Machine Translation.

Why Might a New Machine Translation Paradigm Be Underway?

Current MT engine trends give us a sense of déjà vu.

During the end of the Statistical Machine Translation era, which NMT replaced, there was virtually no change in MT quality output. In addition, the quality output of different MT engines converged. These things are happening now.

NMT may not be replaced imminently. But if we believe in theories of exponential growth and accelerating returns, a new paradigm shift may not be far away: Rule-based MT had a 30-year run, Statistical MT was prominent for a decade, and NMT is now in its sixth year.

What Could Be the Next Machine Translation Paradigm?

Important advances in LLMs during 2022 have primed the technology to enter the MT field in 2023.

LLMs are generic models that have been trained to do many things. However, we saw some dedicated — or fine-tuned — LLMs make essential advancements in some specific areas by the end of 2022. These developments position the technology to perform translations with some additional training.

For instance, take ChatGPT. OpenAI fine-tuned this latest model to conduct question-and-answer dialogues while still being able to do anything generic LLMs do.

Something similar may happen for translation, with LLMs fine-tuned for translation.

In What Way Would Large Language Models Need To Be Fine-tuned To Handle Translation?

LLMs would be more likely to be used for translation if they were trained on a more balanced language corpus.

GPT-3’s training corpus was 93% English; all other languages combined made up only 7%. If GPT-4, whose release seems to be forthcoming, includes more non-English content, we may see LLMs that are better able to deal with multilingualism and, therefore, with translation. A more language-balanced corpus could also serve as the basis for a fine-tuned model specialized in translation.

Another interesting aspect of this hypothetical new MT paradigm based on LLMs is the multimodality trend. We may train LLMs using linguistic and other training data, such as images and video. This type of training may provide additional world knowledge for a better translation.

Would Large Language Models Be a Good Alternative to a Neural Machine Translation Paradigm?

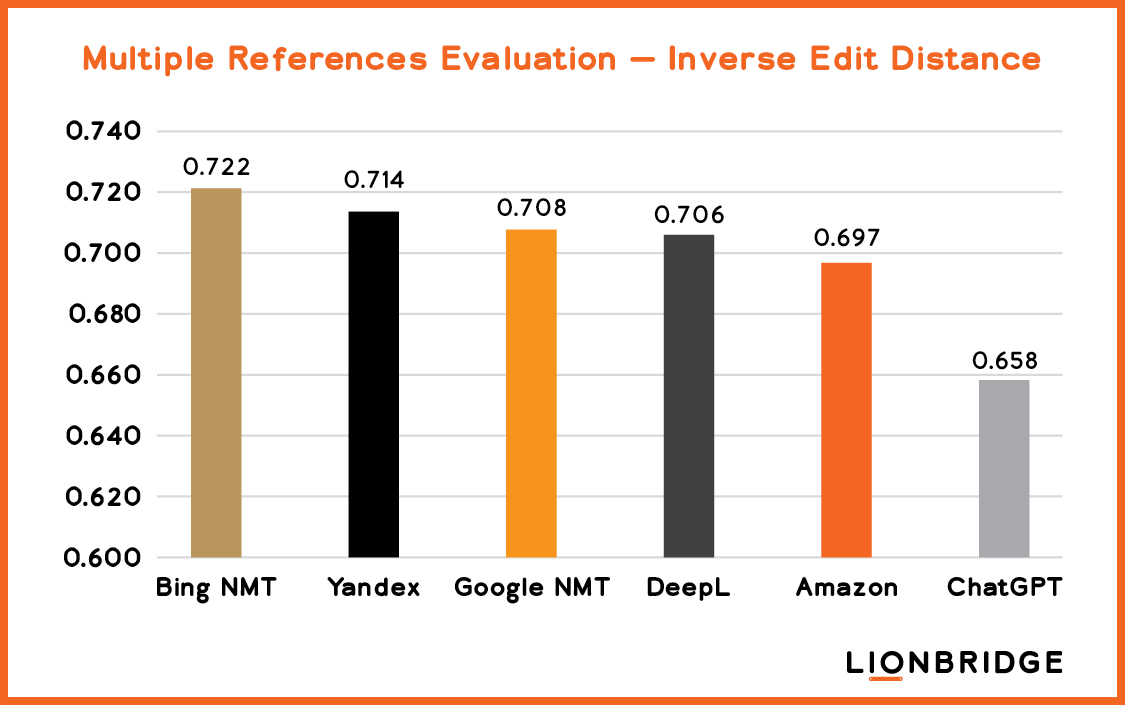

To assess the promise of LLMs to replace the NMT paradigm, we compared ChatGPT’s translation performance to the performance of the five major MT engines we use in our MT Quality Tracking.

As we expected, specialized NMT engines translate better than ChatGPT. But surprisingly, ChatGPT did a respectable job. As shown in Figure 1, ChatGPT performed almost as well as the specialized engines.

How Did We Assess the Quality of ChatGPT vs. Generic MT Engines?

We calculated the quality level of the engines based on the inverse edit distance using multiple references for the English-to-Spanish language pair. The edit distance measures the number of edits a human must make to the MT output for the resulting translation to be as good as a human translation. For our calculation, we compared the raw MT output against 10 different human translations — multiple references — instead of just one human translation. The inverse edit distance means the higher the resulting number, the better the quality.
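The exact formula behind this score is not given here, so the following is only a minimal sketch of how an inverse edit distance with multiple references might be computed. It assumes word-level tokens, a Levenshtein distance normalized by the longer of the two sequences, and that the closest reference determines the score. The function names and the example sentences are hypothetical.

```python
from typing import List, Sequence


def edit_distance(hyp: Sequence[str], ref: Sequence[str]) -> int:
    """Levenshtein distance: insertions, deletions, substitutions cost 1 each."""
    prev = list(range(len(ref) + 1))
    for i, h in enumerate(hyp, start=1):
        curr = [i]
        for j, r in enumerate(ref, start=1):
            cost = 0 if h == r else 1
            curr.append(min(prev[j] + 1,          # delete h from the output
                            curr[j - 1] + 1,      # insert r into the output
                            prev[j - 1] + cost))  # substitute, or keep a match
        prev = curr
    return prev[-1]


def inverse_edit_distance(mt_output: str, references: List[str]) -> float:
    """Score in [0, 1]; higher means fewer edits to reach the closest reference.

    Assumption: normalizing by the longer sequence and taking the best
    reference is one plausible reading, not a confirmed methodology.
    """
    if not references:
        raise ValueError("at least one reference translation is required")
    hyp = mt_output.split()
    best = 0.0
    for reference in references:
        ref = reference.split()
        denom = max(len(hyp), len(ref), 1)
        best = max(best, 1.0 - edit_distance(hyp, ref) / denom)
    return best


if __name__ == "__main__":
    mt = "el gato está en la alfombra"
    refs = [
        "el gato está sobre la alfombra",
        "el gato se sienta en la alfombra",
    ]
    print(round(inverse_edit_distance(mt, refs), 3))  # prints 0.833
```

In this example the output needs one word changed to match the first reference and two edits to match the second, so the first reference sets the score. Using several references in this way avoids penalizing an output for differing from one translator's particular wording.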

Figure 1. Comparison of automated translation quality between ChatGPT and the major Machine Translation engines based on the inverse edit distance using multiple references for the English-to-Spanish language pair.

Why Are the Results of ChatGPT’s Translation Performance Noteworthy?

The results of our comparative analysis are remarkable because the generic model has been trained to do many different Natural Language Processing (NLP) tasks, whereas MT engines have been trained for the single NLP task of translation. And even though ChatGPT has not been specifically trained to execute translations, its translation performance is similar to the quality level MT engines produced two or three years ago.

Read our blog to learn more about ChatGPT and localization.

How Might Machine Translation Evolve as a Result of Large Language Models?

Given the growth of LLMs — based on the public’s attention and the significant investments tech companies are making in this technology — we may soon see whether ChatGPT overtakes MT engines or whether MT will start adopting a new LLM paradigm.

MT may use LLMs as a base but then fine-tune the technology specifically for Machine Translation. It would be like what OpenAI and other LLM companies are doing to improve their generic models for specific use cases, such as making it possible for the machines to communicate with humans in a conversational manner. Specialization adds accuracy to the performed tasks.

What Does the Future Hold for Large Language Models in General?

The great thing about Large Language "Generic" Models is that they can do many different things and offer outstanding quality in most of their tasks. For example, DeepMind’s GATO, another general intelligence model, has been tested in more than 600 tasks, with State-of-the-Art (SOTA) results in 400 of them.

Two development lines will continue to exist — generic models, such as GPT, Megatron, and GATO, and specialized models for specific purposes based on those generic models.

The generic models are important for advancing Artificial General Intelligence (AGI) and possibly for enabling even more impressive developments in the longer term. Specialized models will have practical uses in the short run for specific areas. One of the remarkable things about LLMs is that both lines can progress and work in parallel.

What Are the Implications of a Paradigm Shift in Machine Translation?

As the current Neural Machine Translation technology paradigm reaches its limit and a new dominant Machine Translation technology paradigm emerges — likely based on LLMs — we anticipate some changes to the MT space. Most of the effects will benefit companies, though we expect additional challenges for companies seeking to execute human translations.

Here’s what to expect:

Enhanced quality

There will be a leap in Machine Translation quality as technological advancements resolve long-standing issues, such as the handling of formal vs. informal language and other quality issues pertaining to tone. LLMs may even solve MT engines' biggest problem: their lack of world knowledge. This achievement may be made possible through their multimodality training.

Technologists train modern LLMs not only with vast amounts of text but also with images and video. This type of training gives the LLMs more interconnected knowledge, which helps the machines interpret the meaning of texts.

Increased content output and a reduced supply of top-notch translators

Companies will be able to create more content faster, and content creation will outpace the growth of the pool of translators capable of translating this content. Even with improved MT and increased translator productivity, the translation community will continue to struggle to satisfy translation demands.

Increased adoption of Machine Translation

As the new technology paradigm becomes available and the quality of Machine Translation improves, the demand for translation services will continue to grow, and MT will be adopted in more situations and use cases.

The use of Machine Translation to enhance customer experiences

With improved MT quality and the need for more personalized and tailored customer experiences, companies will use MT more frequently to improve their global customers’ digital experiences and create stronger relationships.

What’s the Bottom Line?

Technology companies are demonstrating immense interest in LLM technology. Microsoft is investing $10B in OpenAI. Nvidia, Google, and other companies are also investing heavily in LLM and AI technology.

We are intrigued by what the future holds and will continue to evaluate LLMs. Watch this space to stay up to date on this exciting evolution.

Get in touch

If you’d like to learn how Lionbridge can help you fully capitalize on Machine Translation, contact us today.